All R&D uses a combination of open-source and proprietary¹ models and LoRAs, and everything runs on a single RTX 3090 GPU.

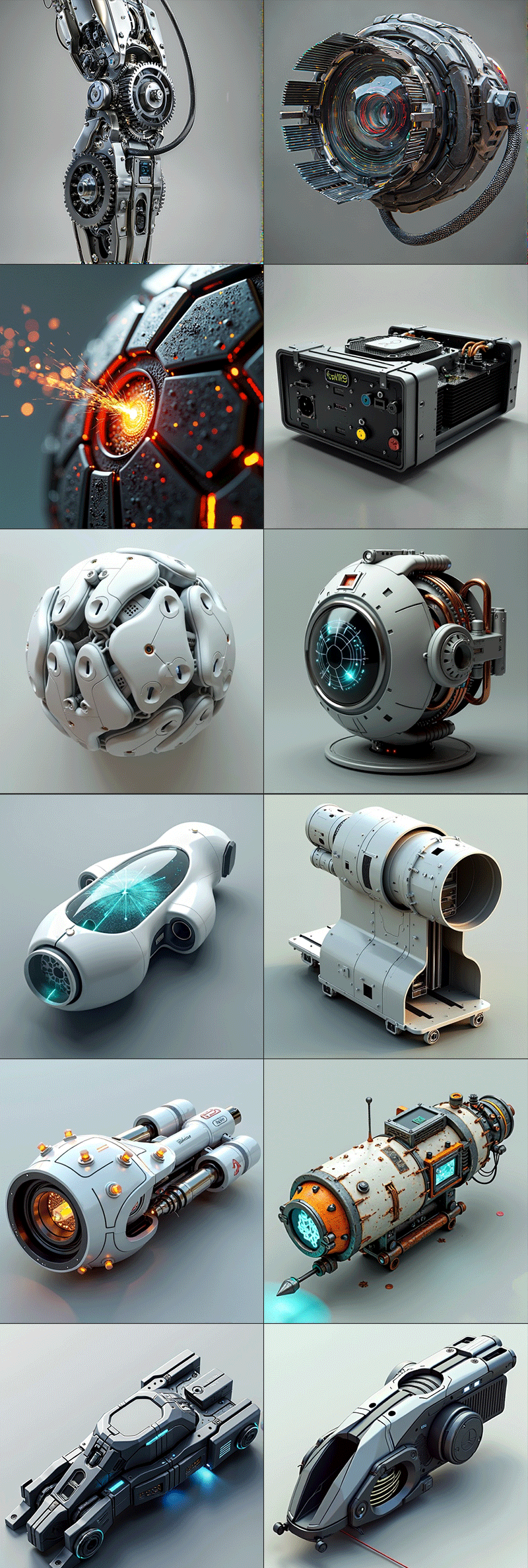

High-Fidelity Image Synthesis:

Custom diffusion models with specialized LoRAs enable rapid visual ideation with precise stylistic control. They generate production-ready, high-resolution assets with consistent characters and preserved detail, with performance treated as a key constraint.

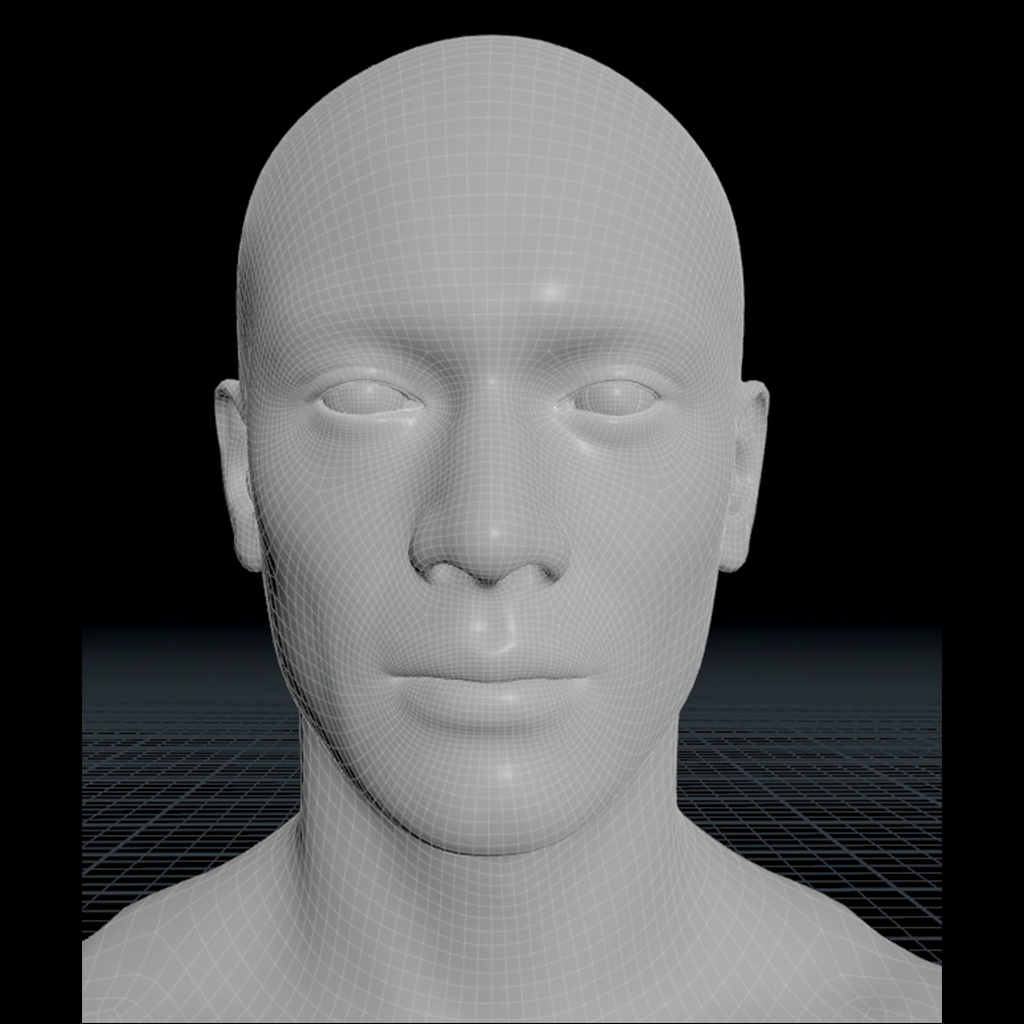

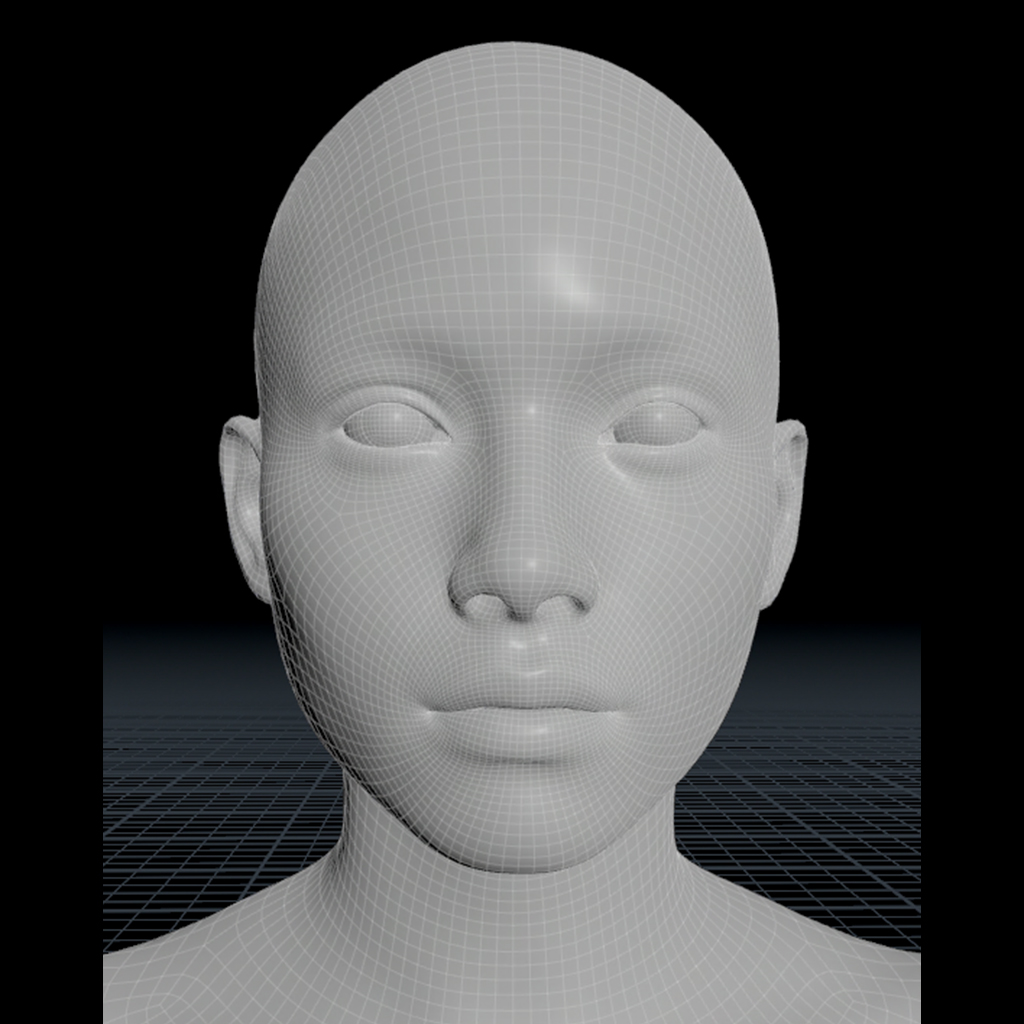

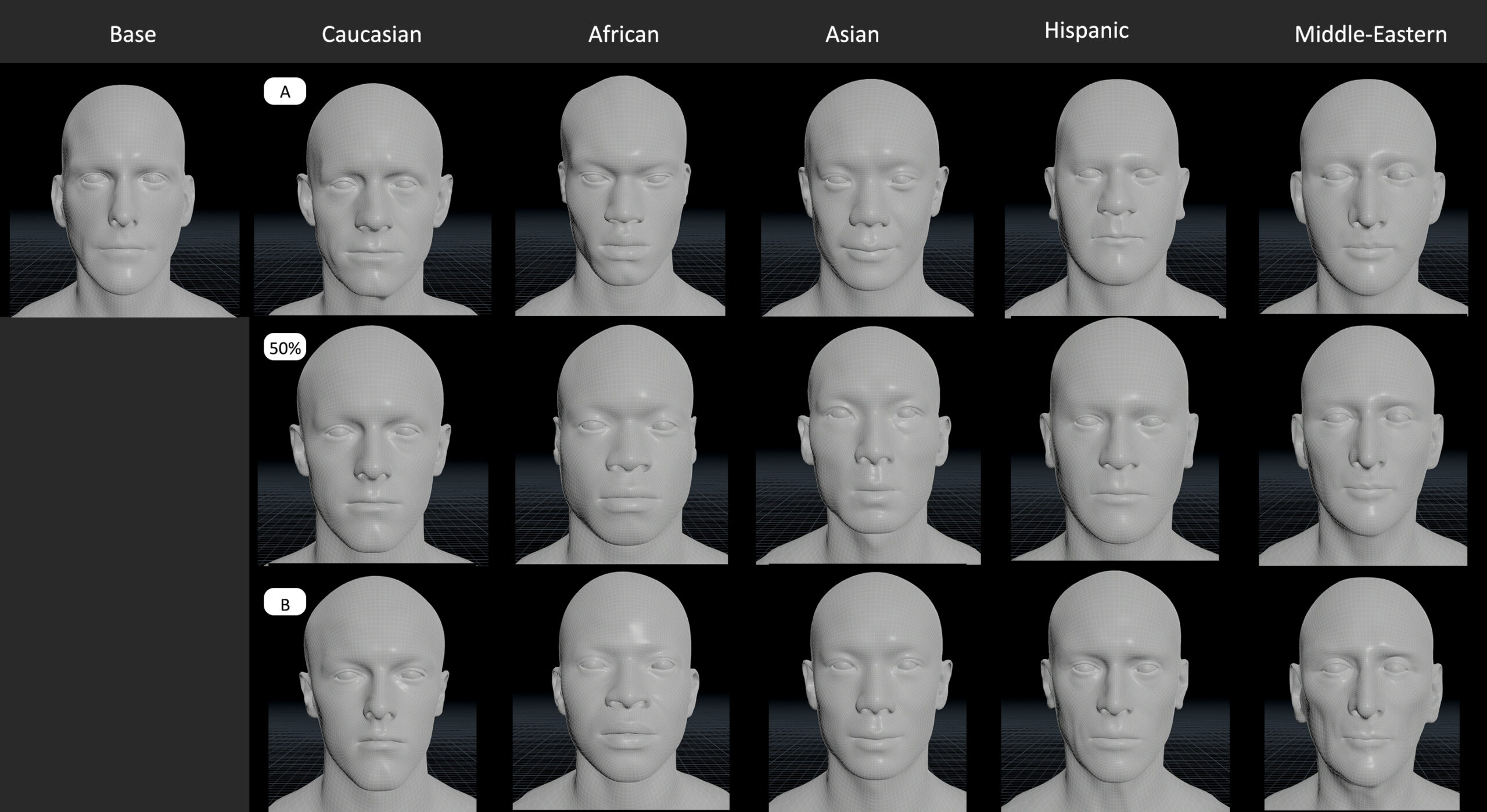

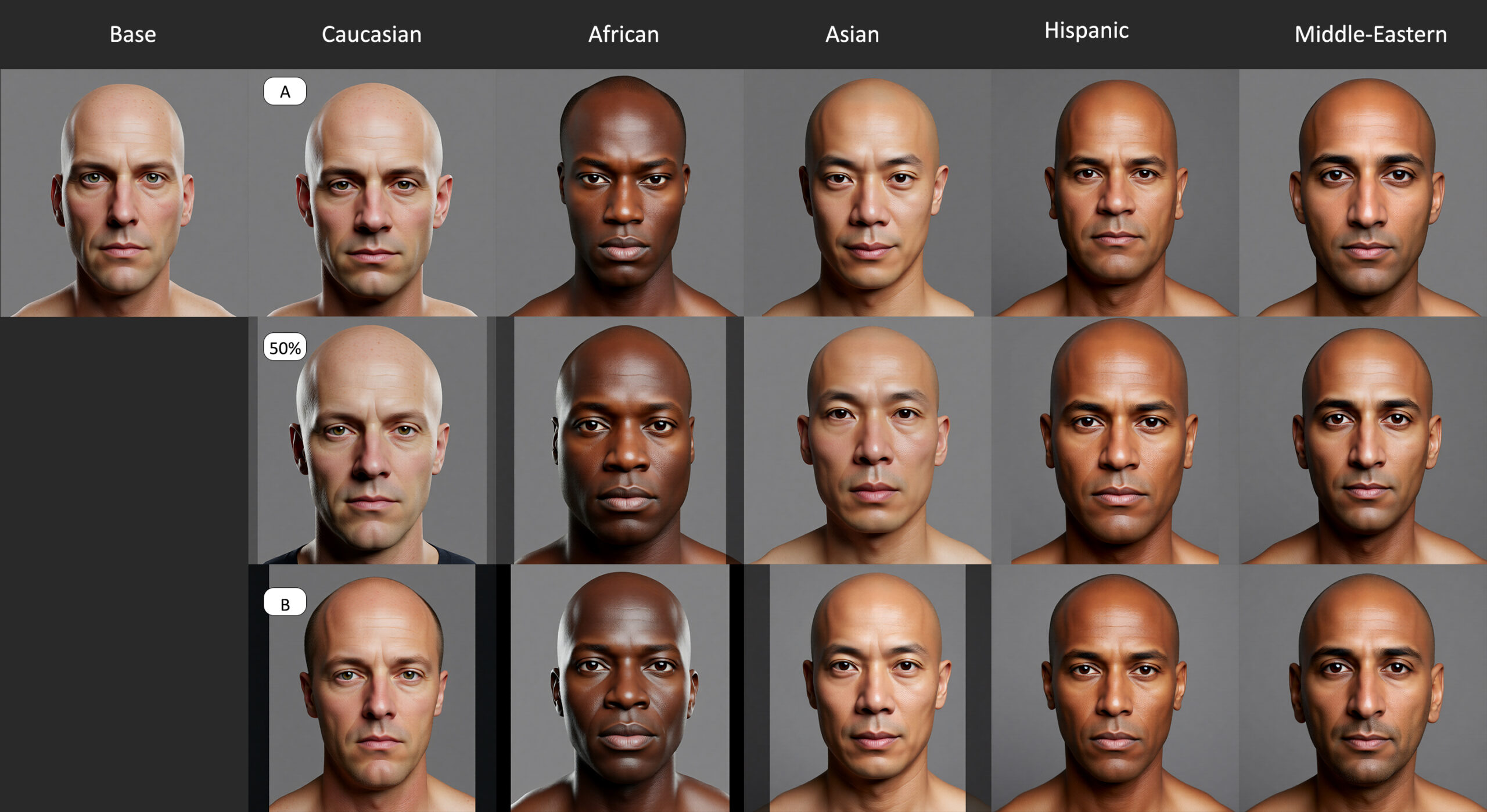

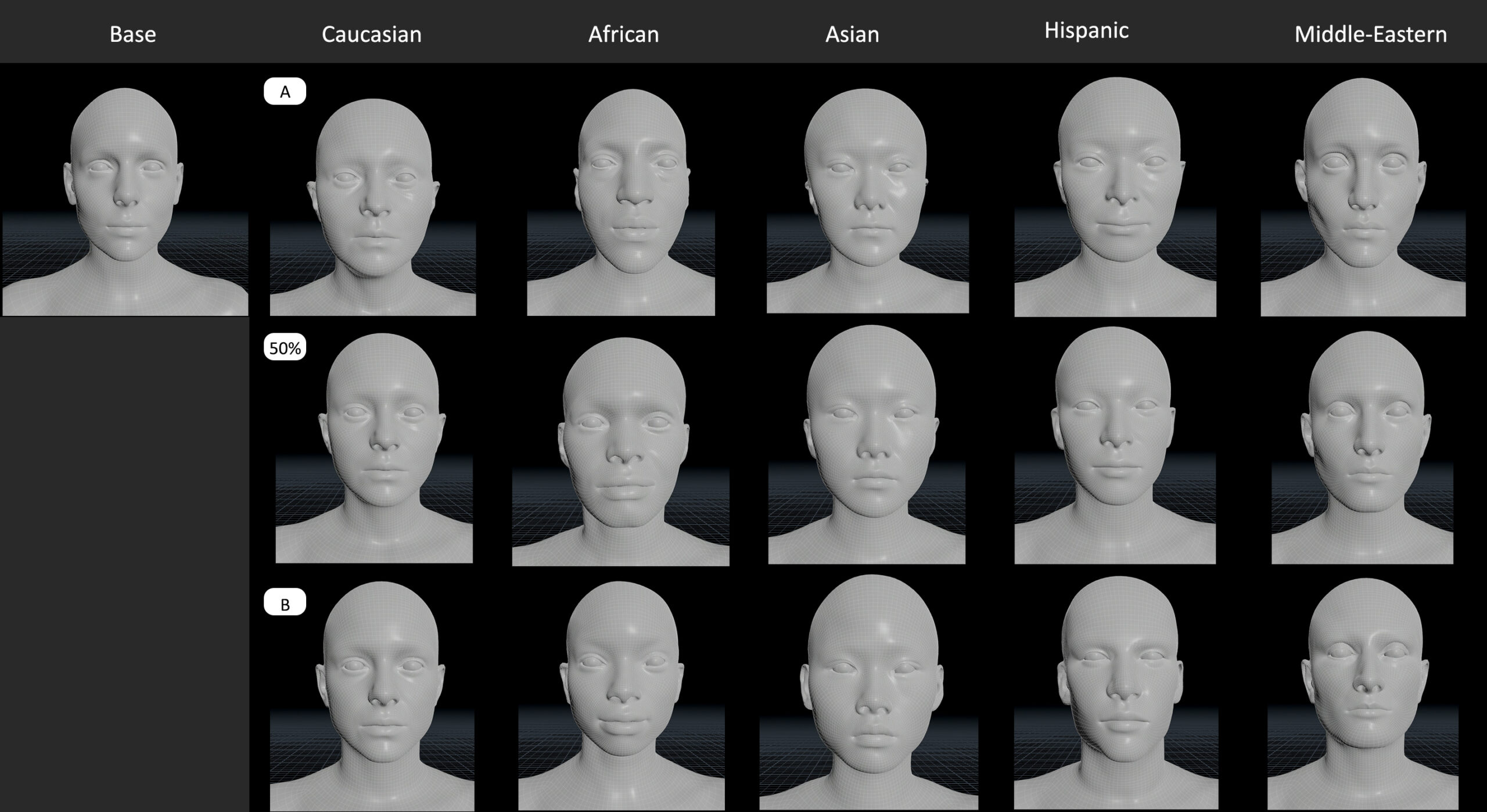

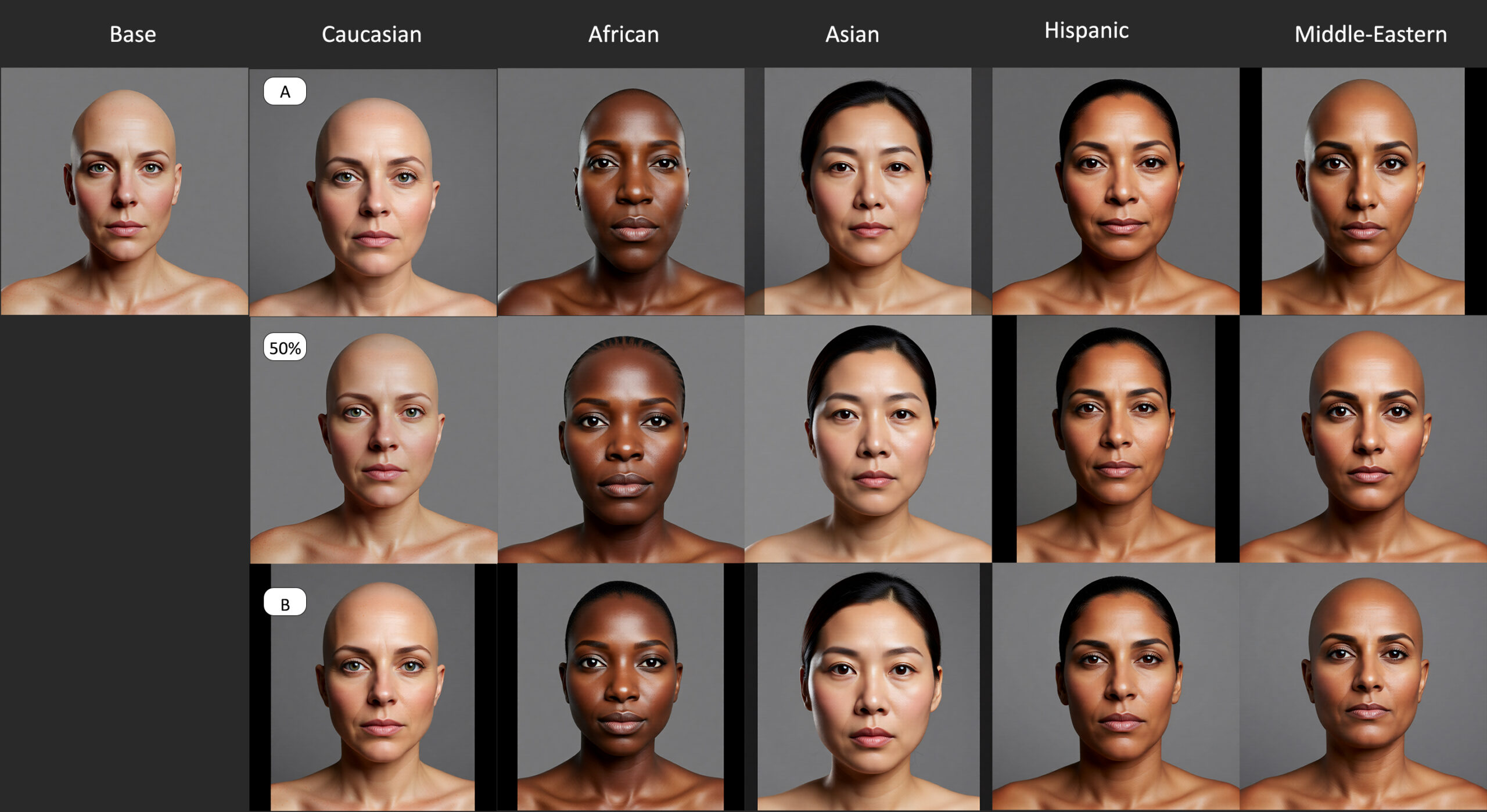

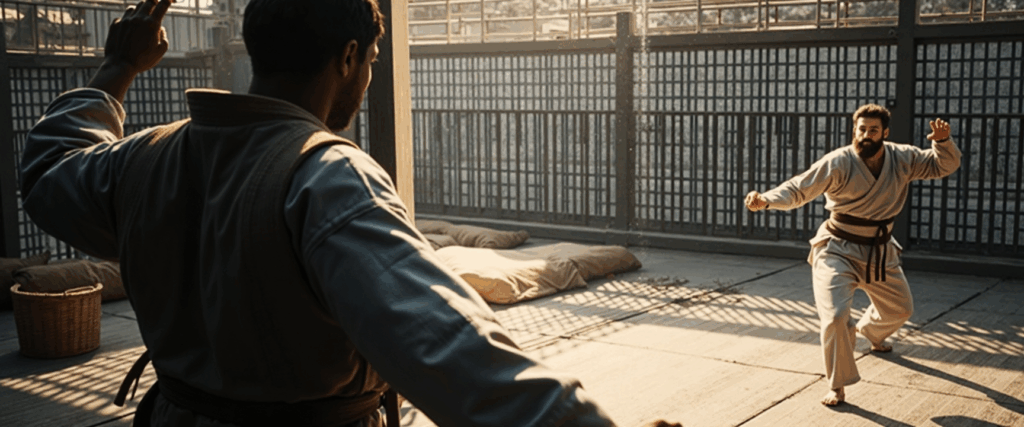

Parametrized Human FAC Generation:

A proprietary generative system produces 3D human models with consistent topology and UV mapping. It supports:

- Procedural crowd generation through parametric blending.

- Age and demographic variation across the full anthropometric spectrum.

- Likeness matching for digi-double generation.

- Direct compatibility with automated rigging and animation pipelines.
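The parametric blending mentioned above can be illustrated with a minimal sketch. It assumes that every base model shares the same vertex order (which the consistent topology implies), so a new individual is a weighted average of vertex positions. The `Mesh` class, `blend_meshes`, and `random_crowd` are hypothetical names for illustration only and do not describe the proprietary system.

```python
import random
from dataclasses import dataclass

Vertex = tuple[float, float, float]


@dataclass(frozen=True)
class Mesh:
    """Vertex positions only. Faces and UVs are assumed identical across bases."""
    vertices: tuple[Vertex, ...]


def blend_meshes(bases: list[Mesh], weights: list[float]) -> Mesh:
    """Return the weighted average of base meshes that share one topology."""
    if not bases or len(bases) != len(weights):
        raise ValueError("need one weight per base mesh")
    vertex_count = len(bases[0].vertices)
    if any(len(mesh.vertices) != vertex_count for mesh in bases):
        raise ValueError("all base meshes must have the same vertex count")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize so the result stays inside the span of the base shapes.
    normalized = [w / total for w in weights]
    blended = tuple(
        tuple(
            sum(w * mesh.vertices[i][axis] for mesh, w in zip(bases, normalized))
            for axis in range(3)
        )
        for i in range(vertex_count)
    )
    return Mesh(blended)


def random_crowd(bases: list[Mesh], count: int, seed: int = 0) -> list[Mesh]:
    """Generate `count` individuals by sampling random blend weights (hypothetical helper)."""
    rng = random.Random(seed)  # seeded so a crowd can be reproduced
    return [
        blend_meshes(bases, [rng.random() + 1e-9 for _ in bases])
        for _ in range(count)
    ]
```

Because faces and UVs never change in this scheme, any blended result would keep the same rig and texture layout as the bases, which is what makes shared topology useful for automated rigging.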

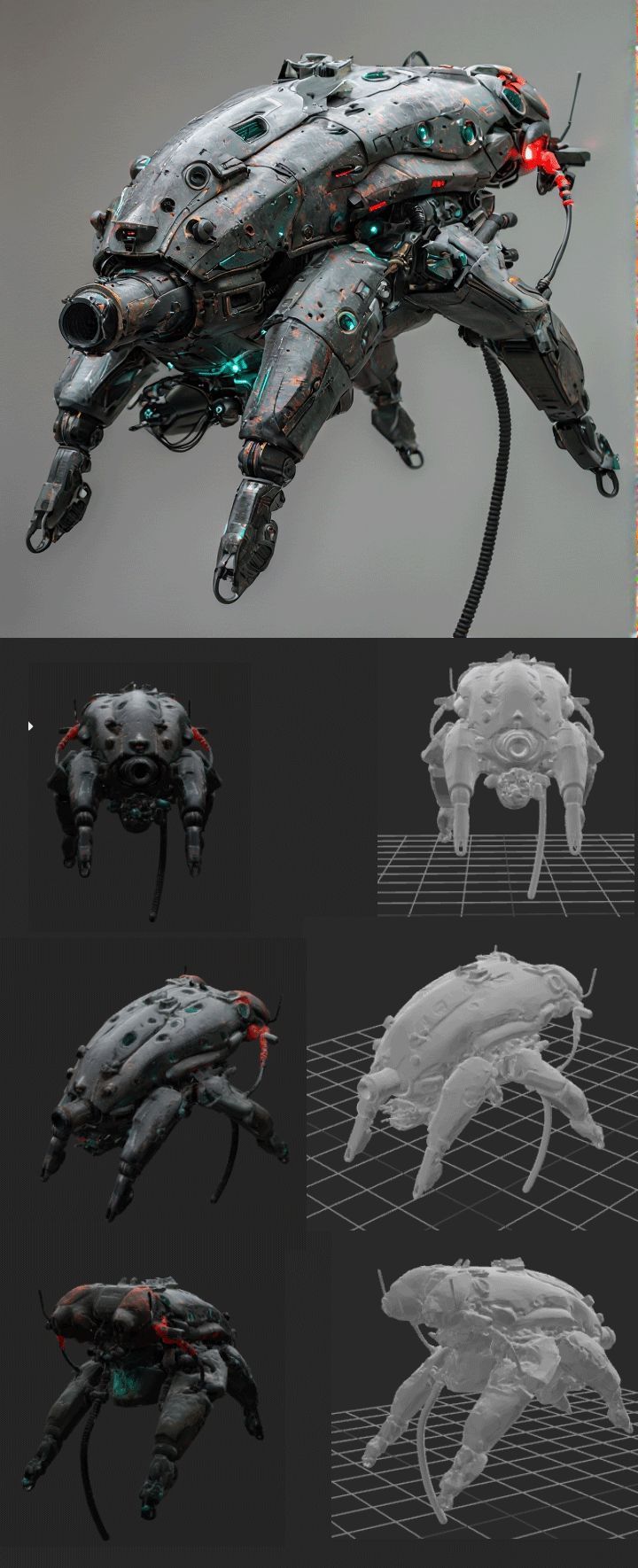

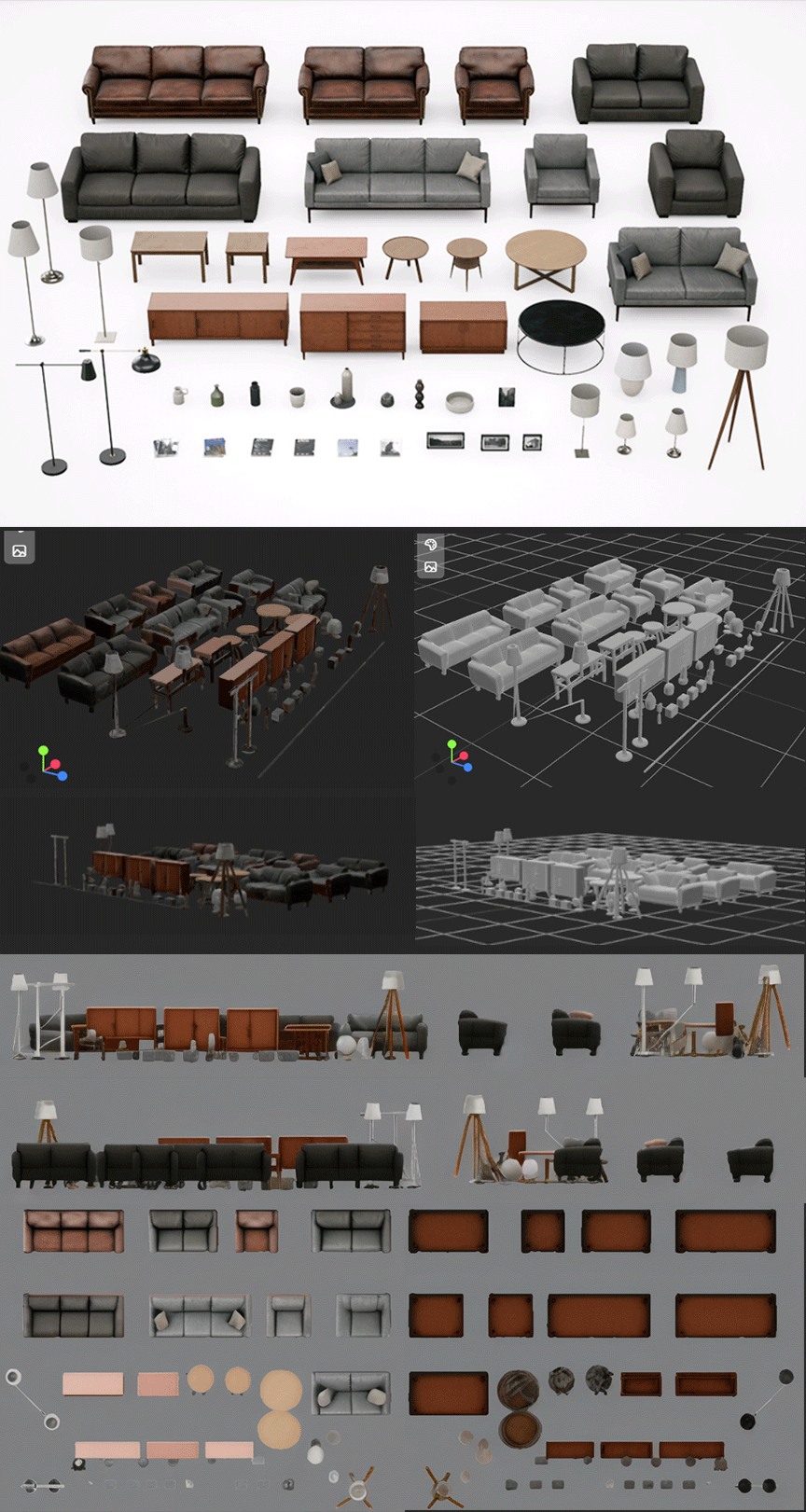

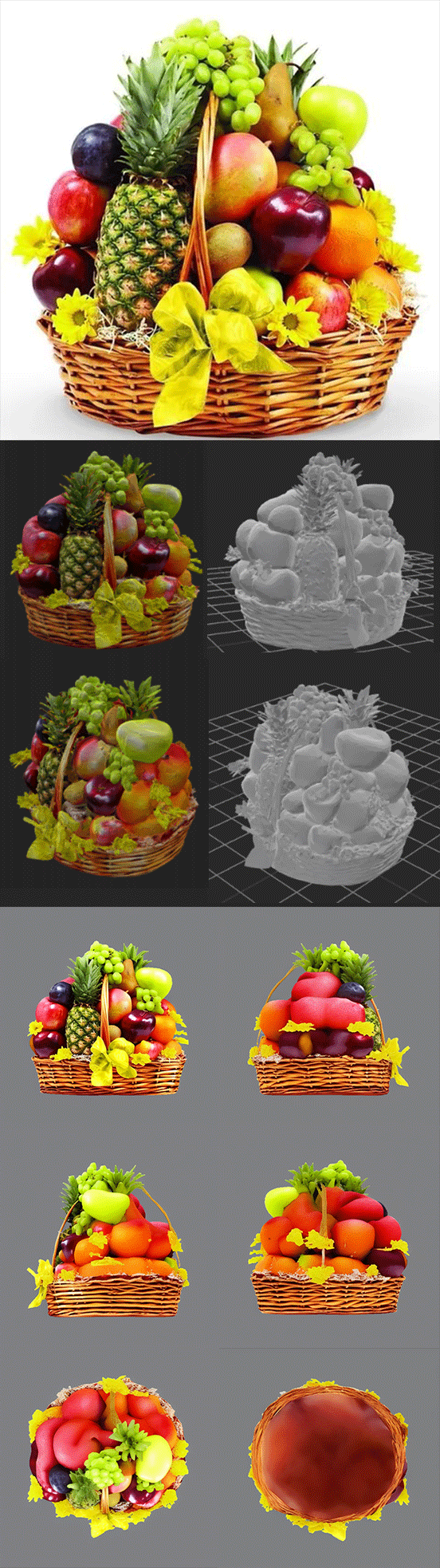

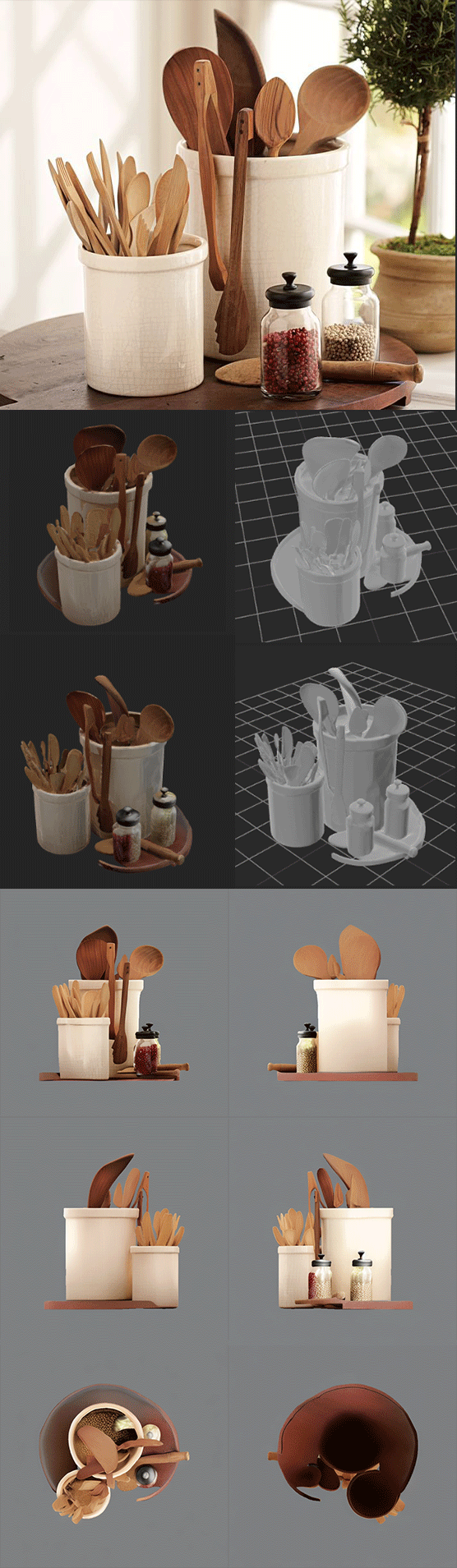

Automated Asset Creation with PBR Materials:

An end-to-end pipeline converts reference images or descriptions into production-ready 3D assets:

- Intelligent segmentation and automated prompt engineering with minimal user input.

- Complete processing: mesh generation, UV unwrapping, and baked PBR texture maps.

- Universal format output for seamless integration into any pipeline.

Visual-LLM Powered Prompt Orchestration:

A local LLM serves as an intelligent interface between user intent and any generative model, while also understanding scene composition and context. It:

- Interprets natural language into prompts and automatically optimizes parameters.

- Provides a standardized API abstraction across disparate model architectures.

- Conducts real-time web research to incorporate the latest optimization techniques and weighting strategies.
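One way to picture a standardized abstraction across different model architectures is an adapter layer: the orchestrator works with a single request type, and each adapter translates it into the payload its backend expects. The sketch below is an assumption about how such a layer could look, not the actual implementation. Every class name, field name, and default value is hypothetical, and the LLM step that would fill in the request is omitted.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class GenerationRequest:
    """Backend-neutral request, e.g. as produced by the LLM from user intent."""
    prompt: str
    negative_prompt: str = ""
    params: dict[str, float] = field(default_factory=dict)


class ModelAdapter(Protocol):
    """Interface every backend adapter is assumed to implement."""
    name: str

    def build_payload(self, request: GenerationRequest) -> dict: ...


class DiffusionAdapter:
    name = "diffusion"

    def build_payload(self, request: GenerationRequest) -> dict:
        # Placeholder defaults, not tuned values.
        return {
            "positive": request.prompt,
            "negative": request.negative_prompt,
            "cfg": request.params.get("guidance", 7.0),
            "steps": int(request.params.get("steps", 30)),
        }


class TextTo3DAdapter:
    name = "text_to_3d"

    def build_payload(self, request: GenerationRequest) -> dict:
        return {
            "description": request.prompt,
            "target_faces": int(request.params.get("target_faces", 50000)),
        }


class Orchestrator:
    """Routes one request type to whichever adapter is registered under a name."""

    def __init__(self) -> None:
        self._adapters: dict[str, ModelAdapter] = {}

    def register(self, adapter: ModelAdapter) -> None:
        self._adapters[adapter.name] = adapter

    def dispatch(self, model_name: str, request: GenerationRequest) -> dict:
        if model_name not in self._adapters:
            raise ValueError(f"no adapter registered for {model_name!r}")
        return self._adapters[model_name].build_payload(request)
```

With this shape, adding a new model would mean writing one adapter rather than changing the code that interprets user intent.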

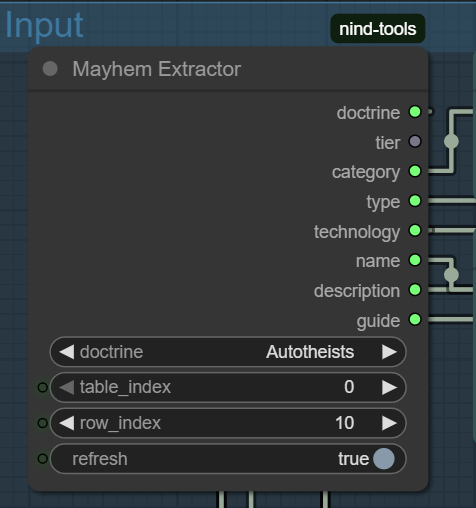

ComfyUI – Custom Nodes Example:

The “Mayhem Extractor” node queries a large Obsidian Markdown database efficiently while maintaining extended context awareness. It improves search and database interaction, and its results feed into the other models for prompt engineering.
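For readers unfamiliar with ComfyUI custom nodes, the sketch below shows the general shape of one: a class with an `INPUT_TYPES` classmethod, `RETURN_TYPES`, `FUNCTION`, and `CATEGORY` attributes, registered through `NODE_CLASS_MAPPINGS`. It is not the Mayhem Extractor itself. The node name `MarkdownVaultSearch`, its inputs, and the naive keyword-count ranking are assumptions chosen to keep the example small; the real node's retrieval method is not described here.

```python
from pathlib import Path


class MarkdownVaultSearch:
    """Hypothetical node: returns the best-matching Markdown notes as one text block."""

    @classmethod
    def INPUT_TYPES(cls):
        return {
            "required": {
                "vault_path": ("STRING", {"default": ""}),
                "query": ("STRING", {"multiline": True, "default": ""}),
                "max_results": ("INT", {"default": 5, "min": 1, "max": 50}),
            }
        }

    RETURN_TYPES = ("STRING",)
    RETURN_NAMES = ("context",)
    FUNCTION = "search"
    CATEGORY = "utils/text"

    def search(self, vault_path, query, max_results):
        terms = query.lower().split()
        scored = []
        # Walk every Markdown file in the vault folder, including subfolders.
        for path in sorted(Path(vault_path).rglob("*.md")):
            text = path.read_text(encoding="utf-8", errors="ignore")
            lowered = text.lower()
            # Naive relevance: total number of query-term occurrences.
            score = sum(lowered.count(term) for term in terms)
            if score > 0:
                scored.append((score, path.name, text))
        # Highest score first; file name breaks ties deterministically.
        scored.sort(key=lambda item: (-item[0], item[1]))
        context = "\n\n".join(
            f"# {name}\n{text.strip()}" for _, name, text in scored[:max_results]
        )
        # ComfyUI expects node outputs as a tuple matching RETURN_TYPES.
        return (context,)


# ComfyUI discovers nodes through these module-level mappings.
NODE_CLASS_MAPPINGS = {"MarkdownVaultSearch": MarkdownVaultSearch}
NODE_DISPLAY_NAME_MAPPINGS = {"MarkdownVaultSearch": "Markdown Vault Search (sketch)"}
```

The returned string can be wired into any downstream node that accepts a `STRING` input, such as a prompt-building or text-encoding node.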

¹ Only hosted locally; no plans to deploy online yet.